On Online Content Moderation

- feb 5, 2025

Some written work

This essay was part of my “Professional Skills” module at uni. The task was to write a sub-2k-word essay on any IT/CS topic we liked. While most people went for topics like blockchain, AI, or their favorite programming language, I decided to scar myself by learning about the filthy work that keeps our doomscrolling beheading-free (unless you use Instagram Reels). Read at your own discretion: topics include self-harm, torture, serious bodily harm, traumatic events, and exploitation of the third world.

Introduction

Commercial content moderation (CCM) is the business of vetting user-generated content (UGC), usually within the social media ecosystem. It exists to ensure that the content-hosting platforms using its services comply with regulations, end-user agreements, content guidelines and standards of social acceptability. Although a young industry, it has closely matched the growth of global social media sites, providing a service essential both to user experience and to avoiding legal roadblocks in operation.

UGC hosted on the largest global social media sites must conform to many regulations, notably those of the US, EU and Germany. These laws are generally not enforced locally; enforcement is instead outsourced to content moderators in periphery countries. Western social standards and the laws that support them are upheld from offices in the Philippines, Nairobi and Gulu. Common names given to this type of labourer include “digital detoxifier” [1] and “virtual captive” [2].

Alternatives to CCM include machine learning algorithms that identify UGC for removal, but such systems are difficult to build, their effectiveness is limited, and machine learning models are notorious for not explaining the decisions they make. Human moderation is needed for as long as automated systems miss some material or appeals must be supported. Platform owners want to avoid users seeing objectionable content, and hence design deliberately vague content guidelines that give moderators the freedom to remove content that some users may find offensive [3][4]. Criticism that established media companies publish on their own websites may not be allowed on social media sites that focus on user-generated content. Content that exposes the crimes of governments and corporations is often misidentified and removed accidentally [5], or even removed deliberately to comply with local content laws [6].

This essay covers why CCM exists, the nature of moderation work and its implications, in order to make clearer the hidden costs, both human and financial, of hosting UGC online. It also discusses the link between platforms and their content moderation operations, with Facebook as the main example.

The need for moderation

Content-sharing platforms aim to keep user retention high for various reasons, and this requires providing a positive experience that does not discourage return traffic.

Users are unlikely to revisit a platform if they see content that is offensive or highly graphic, hence customer experience must be designed to avoid showing such content, be it in the form of images, video or text.

What has allowed platforms to reach their current popularity, with 4.59 billion people registered on social media platforms in 2022, is the fact that most popular services do not need their posts to be vetted by humans before they are shared [7]. But there remains the problem of disturbing or illegal content being shared through these platforms.

Who Moderates the Internet?

Outsourcing labour is a common practice in the technology sector, with the IT outsourcing market valued at US$526.6 billion in 2021 and expected to grow at over 4% per year [8]. According to some researchers, cross-border data flows are now more important than the flow of goods. Though outsourcing is usually undertaken to cut costs when a company is experiencing reduced revenue [9][10], it is particularly favoured when the task being outsourced is very well-defined. Determining whether content is acceptable requires a moderate level of education and some technical understanding of computer systems, hence the job is mostly performed by workers in countries such as the Philippines, Kenya and Uganda, where companies can take advantage of wage arbitrage.

The form of outsourcing used for CCM is microservices work, a form of on-demand workforce for completing batches of well-defined small tasks. This is generally considered part of the modern gig economy, although CCM is one of the original areas of knowledge work and predates current conceptions of the gig economy, which is generally seen as physical work [11]. In the case of CCM, most work is arranged through a platform: typically a lightweight technology company with few conventional employees that connects clients needing small tasks completed to a huge reserve of labourers registered with the service, taking a cut of the listed price. The service of categorising content as acceptable or not for a platform can be well-defined, sectioned into blocks of work, decoupled from the rest of the business, performed anywhere, and completed by temporary workers with little to no training.

The overwhelming majority of demand for online gig-style labour is in the global north but most of the supply is in the global south [12].

Working Conditions

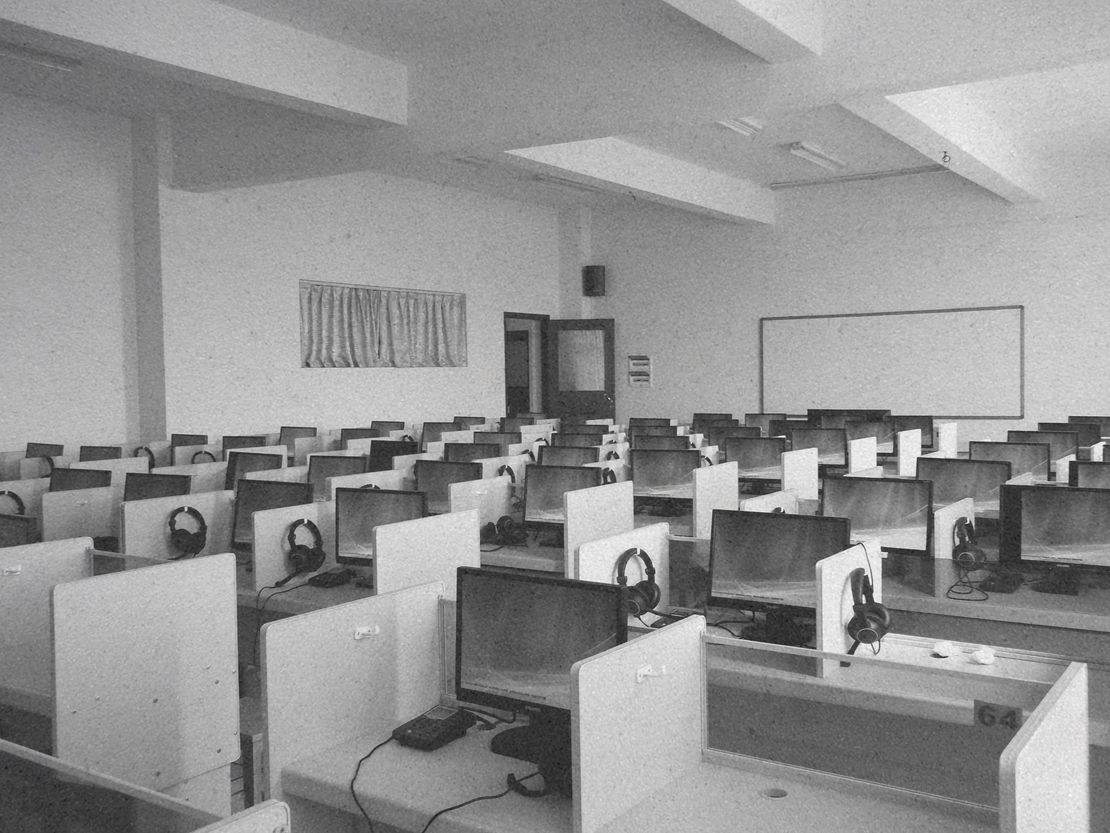

The labour process is highly refined, with workers logging into a system and being presented with a queue of content to moderate. They request a batch of content from the queue and then, via hotkeys or by verbally stating the classification, categorise each item as acceptable or as a specific policy violation. For video content, the systems used often present only a handful of still frames rather than the full video, in an attempt to reduce the mental effects of viewing disturbing content. Still frames can nevertheless be traumatising, and many workers report inadequate mental health services, with the support provided often taking the form of “wellbeing coaches” rather than professional counsellors. The content they are exposed to ranges from Child Sexual Abuse Material (CSAM) to gang torture videos, and usually each worker is assigned to a specific category: one may specialise in ISIS beheadings and another in rape clips. It is not uncommon for certain categories, e.g. pornography, to be further outsourced to another location under a separate contract, and for the most sensitive content, especially when political in nature or involving conflict zones, to be dealt with by workers in the west or under in-house oversight.

Moderation Policy

Although platforms’ public moderation policies are intentionally vague, the inward-facing moderation policies in CCM companies are often very specific and well-defined. Workers’ own moral and ethical views are generally “superseded by the mandates and dictates of the companies that hire them” [4]. Not only is the content shown to them often traumatising, but they have little agency in their moderation decisions, instead acting as more of an automaton, simply comparing content against the list of policy violations recognised by the company employing them.

To many moderators, the collection of content types deemed harmful seems arbitrary and incomplete. For example, veteran CCM researcher Sarah T. Roberts, who coined the term CCM, found that many workers disagree with the policies they must adhere to. An employee at one moderation company expressed concern that his company's policy does not treat blackface as hate speech [4], and felt ignored after raising it with management: “They never changed the policy but they always let me have my say …” A surprising entry in Facebook’s 2012 guidelines reads “Crushed heads, limbs etc are OK as long as no insides are showing.” [13]

Platform Power

Of equally serious concern is the power platforms hold in movements for social justice and in their ability to sway public sentiment by selectively, intentionally or not, removing certain content. While YouTube for example is reasonably uncontroversial in its decision to waive its graphic content policies for sharing educational medical content, the hosting of videos showing police brutality has major implications. The sharing of the video of the police shooting of Tamir Rice may have helped the attempt to convict the officers involved [14][15], as the details in the video directly contradict the statement made by the officers. In this instance, the platform aided the attempt to bring representatives of a large institution to justice, but just as easily a platform can decide to remove similar shocking content.

Facebook came under fire in 2016 for removing an image of ’Napalm Girl’ [16], a Pulitzer Prize-winning picture of a naked Vietnamese girl whose back was burned by napalm in a friendly fire incident [17], which is widely used in anti-war iconography. Moderators working indirectly for another platform were told to remove pictures of torture taken in the infamous Abu Ghraib prison [5]. These examples raise the question of whether platforms fall into aggressively moderating posts deemed to carry anti-government sentiment so as to avoid legal repercussions. As social media sites are generally profit-seeking, another conflict of interest could arise when a company that is being criticised in a social media post pays for advertising on the very same site.

Responsibilities (a lack of)

Sama, previously Samasource, is a data annotation company that was originally founded as a non-profit but is now structured as a B Corp, a certification granted to companies that function with social good in mind. It primarily functions as a platform that provides micro-services work to previously unempowered demographics [18], operating fulfillment centres in Nairobi, Kenya; Kampala, Uganda; and Gulu, Uganda. Prominent clients include Google, Facebook, Microsoft and General Motors.

Despite the gleaming credentials, Sama has been the subject of multiple controversies over worker conditions and labour rights. One instance relates to a 2019 contract Sama held with Facebook to moderate content for the Sub-Saharan Africa region. Focusing on the Nairobi office, a TIME investigation found that “the workers … are among the lowest-paid workers for the platform [Facebook] anywhere in the world, with some of them taking home as little as $1.50 per hour” [19]. Sama was alleged to have illegally fired an employee who was attempting to unionise his office after an unsuccessful attempt to organise a strike over working conditions. The case was taken on by the London-based legal NGO Foxglove, which is crowdfunding the case’s legal fees [20].

Three years after his firing, Daniel Motaung launched his legal case, asking that the court enforce several reforms to Facebook’s working conditions for outsourced labourers, including proper mental health support, pay matching that of Facebook’s in-house moderators, and an end to illegal firings and union busting within Sama [21]. Soon after the case was launched, Facebook and Sama unsuccessfully attempted to “…impose a gag order on Daniel, Foxglove and his legal team”, as reported by TIME [22]. Facebook has since axed the contract, and Sama has made all 260 moderators redundant [23]. According to a recruiter at Facebook’s replacement supplier Majorel, “… they will not accept candidates from Sama, it’s a strict no”. In an attempt to distance itself from the Motaung case, Facebook requested to be removed from it on the grounds that the defendant is Sama. A Kenyan judge rejected the request, ruling that “Facebook is a ‘proper party’ in Daniel Motaung’s legal case” [24]. These attempts by Facebook to escape the repercussions of issues arising from a contract it created show that platforms are legally distanced from the labour they are paying for.

Hashes and CSAM

Removing harmful content once it has been identified is relatively straightforward. Forms of non-cryptographic hashing such as fuzzy, locality-sensitive and perceptual hashing are the industry-standard techniques for detecting images and videos that have been deemed to violate use policies [25]. These methods use special hash functions whose outputs stay similar across modified copies of the same content, e.g. after cropping an image or applying a filter. As content is uploaded, it can be checked against a database containing the hashes of banned content before it is allowed on a platform.
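To make the idea concrete, here is a minimal sketch of one simple perceptual hash, the "average hash", written in plain Python. It is an illustration only, not what any particular platform runs: the function names, the tiny 4x4 example images and the `max_distance` threshold are all assumptions made for this example, and real systems downscale full images first and use more robust hashes.

```python
def average_hash(pixels):
    """Hash a small grayscale image (2D list of 0-255 values).

    Each pixel contributes one bit: 1 if it is brighter than the
    image's mean brightness, 0 otherwise.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a, b):
    """Count the bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_banned(candidate_hash, banned_hashes, max_distance):
    """True if the candidate is within max_distance bits of any banned hash.

    max_distance is a hypothetical tuning knob: higher values catch more
    modified copies but also flag more unrelated images.
    """
    return any(
        hamming_distance(candidate_hash, banned) <= max_distance
        for banned in banned_hashes
    )


# Hypothetical 4x4 "images": a banned original, the same image with a
# brightening filter applied, and an unrelated checkerboard.
ORIGINAL = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]
BRIGHTENED = [[value + 20 for value in row] for row in ORIGINAL]
CHECKERBOARD = [
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]

BANNED_HASHES = {average_hash(ORIGINAL)}

print(is_banned(average_hash(BRIGHTENED), BANNED_HASHES, max_distance=2))
print(is_banned(average_hash(CHECKERBOARD), BANNED_HASHES, max_distance=2))
```

The brightened copy produces the same hash as the original because every pixel is compared with the image's own mean, so it is caught, while the checkerboard differs in half of its bits and passes. A cryptographic hash such as SHA-256 would treat the brightened copy as entirely new content, which is why similarity-preserving hashes are used for this job.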

Usually, it’s up to each platform to maintain its own list, but for some content such as Child Sexual Abuse Material (CSAM) global databases are available [26]. Digital Rights Management (DRM) companies offer similar services that provide hashes of content that they manage, to ensure copyrighted content is not shared freely. A version of this technology powers YouTube’s Content ID system [27].

AI won’t save us

Solutions that use neural networks to identify bad content are becoming common with the shrinking cost of training and deploying machine learning models, but they have their flaws. Scalability is one of the fundamental factors in the success of many technology companies, and it is no different for content-sharing platforms [28].

The rapid growth that so often characterises the sector is only possible if the cost of providing service grows slower than linearly with the number of users. Therefore huge investments have been made in the development of automated systems that would mostly replace human moderators.

Computer vision is a quickly growing field of AI research, with various implementations of object detection networks able to identify the subjects of the images they are trained on with great accuracy, but a model is only as good as its training data. For example, types of harmful content that are not included in the training data will not be identified with high accuracy, and anything missed by the automated system needs human judgement. There is also the issue that most data sets will naturally not include much content from minority groups and those who are not well represented on the Internet. Groups of activists involved in reporting harmful online content coming out of the Ethiopian civil war report content directly to Facebook’s human rights team rather than using the platform’s reporting system [29]. “The reporting system is not working. The proactive technology, which is AI, doesn’t work,” one activist said. Setting aside biased or insufficient data sets for a moment, even if a perfect machine learning model can exist, it must be trained using labelled data, and many of the same companies that provide CCM services also provide data set labelling services. For example, Sama recently made the shift to only providing machine learning training services, including data labelling, for the likes of OpenAI’s ChatGPT [30].
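One way to picture how a classifier and human judgement fit together is a simple routing rule: act automatically only when the model is very sure, and send everything else to a person. The sketch below is hypothetical. The `route` function, the `Decision` type and both thresholds are assumptions made for illustration, not a description of any platform's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "allow" or "human_review"
    reason: str


def route(violation_score, remove_above=0.95, allow_below=0.05):
    """Route one item based on a model's estimated violation probability.

    violation_score is assumed to be in [0, 1]. Both thresholds are
    hypothetical; widening the gap between them sends more content
    to human moderators.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_above:
        return Decision("remove", "model is highly confident of a violation")
    if violation_score <= allow_below:
        return Decision("allow", "model is highly confident the item is acceptable")
    return Decision("human_review", "model is uncertain")


for score in (0.99, 0.50, 0.01):
    print(score, route(score).action)
```

Note what this does not solve: content the model confidently gets wrong never reaches a human at all, which is exactly the failure the activists quoted above describe.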

Conclusion

While platform owners are concerned with maximising user retention and hence minimising the spread of content that could discourage platform use, the work of curating content is obfuscated to provide a (false) image of a site free from potentially harmful material. Just as consumers of the expensive technology that is a staple of western lifestyles think very little about the workers who make their devices, little thought is given to those who curate their online experiences. This lack of public attention, which helps preserve poor working conditions, contrasts with the enormous number of viewpoints on online censorship, which is simply another part of CCM.

The future of content moderation looks uncertain, but it is likely to directly affect the lives of the majority of people on the planet, and indirectly all others through its power to influence legislation in even the most disconnected parts of the world. Labour unions representing moderators are beginning to appear in countries with favourable worker protections such as Germany [31], and the increasing volume of research, both in academic journals and in journalism, shines a light on a previously obscured industry. The work that moderators do is challenging both technically, in ensuring correct decisions, and mentally, given possible exposure to some of the worst imagery and text imaginable, coming from all over the world. While many are locked into non-disclosure agreements, barred from speaking of their work, we all experience it when we access an internet freer from hate and harm.

References

[1] R. Trenholm. The cleaners documentary crawls into the scary side of facebook. https://www.cnet.com/culture/entertainment/the-cleaners-sundance-documentary-review-dirt-on-social-media-fake-news/. (accessed: 19.03.2023).

[2] MicroSourcing International Inc. Virtual captives philippines - microsourcing (archived 3rd october 2011). https://web.archive.org/web/20111008025739/http://www.microsourcing.com/virtual-captives.asp. (accessed: 28.03.2023).

[3] S. Kopf, “Corporate censorship online: Vagueness and discursive imprecision in youtube’s advertiser-friendly content guidelines,” New Media & Society, 2022.

[4] S. T. Roberts, “Commercial content moderation: Digital laborers’ dirty work,” Media Studies Publications, vol. 12, 2016.

[5] B. Bishop. The verge: The cleaners is a riveting documentary about how social media might be ruining the world. https://www.theverge.com/2018/1/21/16916380/sundance-2018-the-cleaners-movie-review-facebook-google-twitter. (accessed: 29.01.2023).

[6] J. Mchangama. Rushing to judgment: Examining government mandated content moderation. https://www.lawfareblog.com/rushing-judgment-examining-government-mandated-content-moderation. (accessed: 28.03.2023).

[7] Statista. Number of social media users worldwide from 2017 to 2027 (in billions). https://www.statista.com/statistics/278414/number-of-worldwide-social-network-users/. (accessed: 26.03.2023).

[8] Mordor Intelligence. It outsourcing market - growth, trends, covid-19 impact, and forecasts (2023 - 2028). https://www.mordorintelligence.com/industry-reports/it-outsourcing-market/. (accessed: 26.03.2023).

[9] P. A. Strassmann. Most outsourcing is still for losers. https://www.computerworld.com/article/2574816/most-outsourcing-is-still-for-losers.html. (accessed: 29.01.2023).

[10] M. C. Lacity, S. A. Khan, and L. P. Willcocks, “A review of the it outsourcing literature: Insights for practice,” The Journal of Strategic Information Systems, vol. 18, no. 3, pp. 130–146, 2009.

[11] G. P. Watson, L. D. Kistler, B. A. Graham, and R. R. Sinclair, “Looking at the gig picture: Defining gig work and explaining profile differences in gig workers’ job demands and resources,” Group & Organization Management, vol. 46, no. 2, pp. 327–361, 2021.

[12] International Labour Organisation. 2021 world employment and social outlook report. https://www.ilo.org/wcmsp5/groups/public/---dgreports/---dcomm/---publ/documents/publication/wcms_771749.pdf. (accessed: 26.03.2023).

[13] A. Chen. Inside facebook’s outsourced anti-porn and gore brigade, where ’camel toes’ are more offensive than ’crushed heads’. https://www.gawker.com/5885714/inside-facebooks-outsourced-anti-porn-and-gore-brigade-where-camel-toes-are-more-offensive-than-crushed-heads. (accessed: 27.03.2023).

[14] cleveland.com. Video of cleveland police shooting of tamir rice raises disturbing questions: editorial. https://www.cleveland.com/opinion/2014/11/video_of_tamir_rice_shooting_b.html. (accessed: 27.03.2023).

[15] News 5 Cleveland. Full video: Tamir rice shooting video. https://www.youtube.com/watch?v=dw0EMLM1XRI. (accessed: 27.03.2023).

[16] Z. Kleinman. Fury over facebook ’napalm girl’ censorship. https://www.bbc.co.uk/news/technology-37318031. (accessed: 27.03.2023).

[17] Unknown. Girl, 9, survives napalm burns. https://www.nytimes.com/1972/06/11/archives/girl-9-survives-napalm-burns.html. (accessed: 27.03.2023).

[18] Sama. Our impact. https://www.sama.com/impact/. (accessed: 30.01.2023).

[19] TIME. Inside facebook’s african sweatshop. https://time.com/6147458/facebook-africa-content-moderation-employee-treatment/. (accessed: 30.01.2023).

[20] Luminate Group. Foxglove legal. https://luminategroup.com/investee/foxglove. (accessed: 03.02.2023).

[21] Foxglove. Daniel motaung launches world-first case to force facebook to finally put content moderators’ health and wellbeing ahead of cash. https://www.foxglove.org.uk/. (accessed: 03.02.2023).

[22] TIME. Facebook cracks whip on black whistleblower. https://time.com/6193231/facebook-crack-whip-black-whistleblower/. (accessed: 03.02.2023).

[23] Foxglove. Mark zuckerberg – reverse the mass sacking of your kenya content moderators. https://www.foxglove.org.uk/campaigns/mark-zuckerberg-reverse-the-nairobi-sackings/. (accessed: 27.03.2023).

[24] Foxglove. Facebook tried to dodge former content moderator daniel’s case in kenya – and failed. https://www.foxglove.org.uk/2023/02/06/facebook-tried-to-dodge-former-content-moderator-daniels-case-in-kenya-and-failed/. (accessed: 06.02.2023).

[25] R. Gorwa, R. Binns, and C. Katzenbach, “Algorithmic content moderation: Technical and political challenges in the automation of platform governance,” Big Data & Society, vol. 7, no. 1, 2020.

[26] INHOPE. A deep dive into iccam. https://inhope.org/EN/articles/a-deep-dive-into-iccam. (accessed: 28.01.2023).

[27] YouTube. How content id works. https://support.google.com/youtube/answer/2797370?hl=en. (accessed: 28.03.2023).

[28] N. Srnicek, Platform Capitalism. Polity Press, 2017.

[29] N. Robins-Early. How facebook is stoking a civil war in ethiopia. https://www.vice.com/en/article/qjbpd7/how-facebook-is-stoking-a-civil-war-in-ethiopia. (accessed: 28.03.2023).

[30] TIME. Exclusive: Openai used kenyan workers on less than $2 per hour to make chatgpt less toxic. https://time.com/6247678/openai-chatgpt-kenya-workers/. (accessed: 28.03.2023).

[31] Foxglove. Breaking the code of silence: what we learned from content moderators at the landmark berlin summit. https://www.foxglove.org.uk/2023/03/15/building-worker-power-in-social-media-content-moderation-our-thoughts-on-the-landmark-moderators-unite-summit-in-berlin/. (accessed: 28.03.2023).